Importance Sampling

This notebook will walk you through how to perform importance sampling using X-PSI

Performing Importance Sampling with X-PSI

Importance sampling (calculating new posterior distribution based on the already existing samples) can be performed using X-PSI under the following circumstances:

Model Update:

The model itself has changed, which implies a modification of the likelihood function.

However, note that the importance sampling results become less accurate for larger likelihood changes.

Change in Parameter Priors:

The prior for one or more parameters has been modified.

However, the new priors must still lie within the hypervolume of the original prior distribution, and the original sampling needs to have produced enough samples around the likeliest region of the new prior.

Both Changes:

Both the prior and the model have been updated simultaneously.

At the end of the notebook, you can find how to recompute the evidence after importance sampling is performed

1. Model Update

For the new model, we changed the spin frequency from 314.0 Hz (which was used to generate the data) to 310.0 Hz (this is technically not the correct model, it’s just an example). One reason to adjust only the frequency in the model while keeping the data unchanged could arise from inadvertently using a frequency different from the one used to fold the data, only to realize this discrepancy after performing the run (with the wrong frequency).

[1]:

import math

import xpsi

from xpsi.Sample import importance

from xpsi.global_imports import gravradius

import sys

from collections import OrderedDict

/=============================================\

| X-PSI: X-ray Pulse Simulation and Inference |

|---------------------------------------------|

| Version: 3.2.1 |

|---------------------------------------------|

| https://xpsi-group.github.io/xpsi |

\=============================================/

Imported emcee version: 3.1.6

Imported PyMultiNest.

Imported UltraNest.

Warning: Cannot import torch and test SBI_wrapper.

Imported GetDist version: 1.5.3

Imported nestcheck version: 0.2.1

[2]:

path="../../examples/examples_fast/"

sys.path.append(path)

[3]:

%%capture

# Let's import the old and the new models

from Modules import main as old_model # The spin in main is 314.0 Hz

from Modules import main_IS_likelihood as new_model # The spin in main_IS_likelihood is 310.0 Hz

[4]:

def derive_names(model):

out = str(model.likelihood)

outA = out.split('\n')

outA = outA[2:-1]

names = []

for i in range(len(outA)):

a = outA[i]

ind_end = a.find(':')

if a[:ind_end].find(' ')<0:

names.append(a[:ind_end])

return names

names = derive_names(old_model)

[5]:

names

[5]:

['mass',

'radius',

'distance',

'cos_inclination',

'p__phase_shift',

'p__super_colatitude',

'p__super_radius',

'p__super_temperature']

[ ]:

# Doing importance sampling

# Commenting this as it takes a bit of time to run

######################

# importance(new_model.likelihood,

# old_model.likelihood,

# '../../examples/examples_fast/Outputs/ST_live_1000_eff_0.3_seed42',

# names = names,

# likelihood_change = True,

# prior_change = False,

# weight_threshold=1.0e-30,

# overwrite=True)

Importance sampling commencing...

Cross-checking parameter names...

Loading samples...

Importance sample fraction = 84.817%.

Distributing workload amongst 1 MPI processes...

Renormalising weights...

normalisation constant = 1.0385084078737605

Writing to disk...

Restoring likelihood-object parameter update protocol attributes...

Importance sampling completed.

For evidence computation: normalisation constant = 1.0385084078737605

Running the cell above will generate a file called, ST_live_1000_eff_0.3_seed42_importance_sampled_likelihood_change.txt that contains the new posteriors.

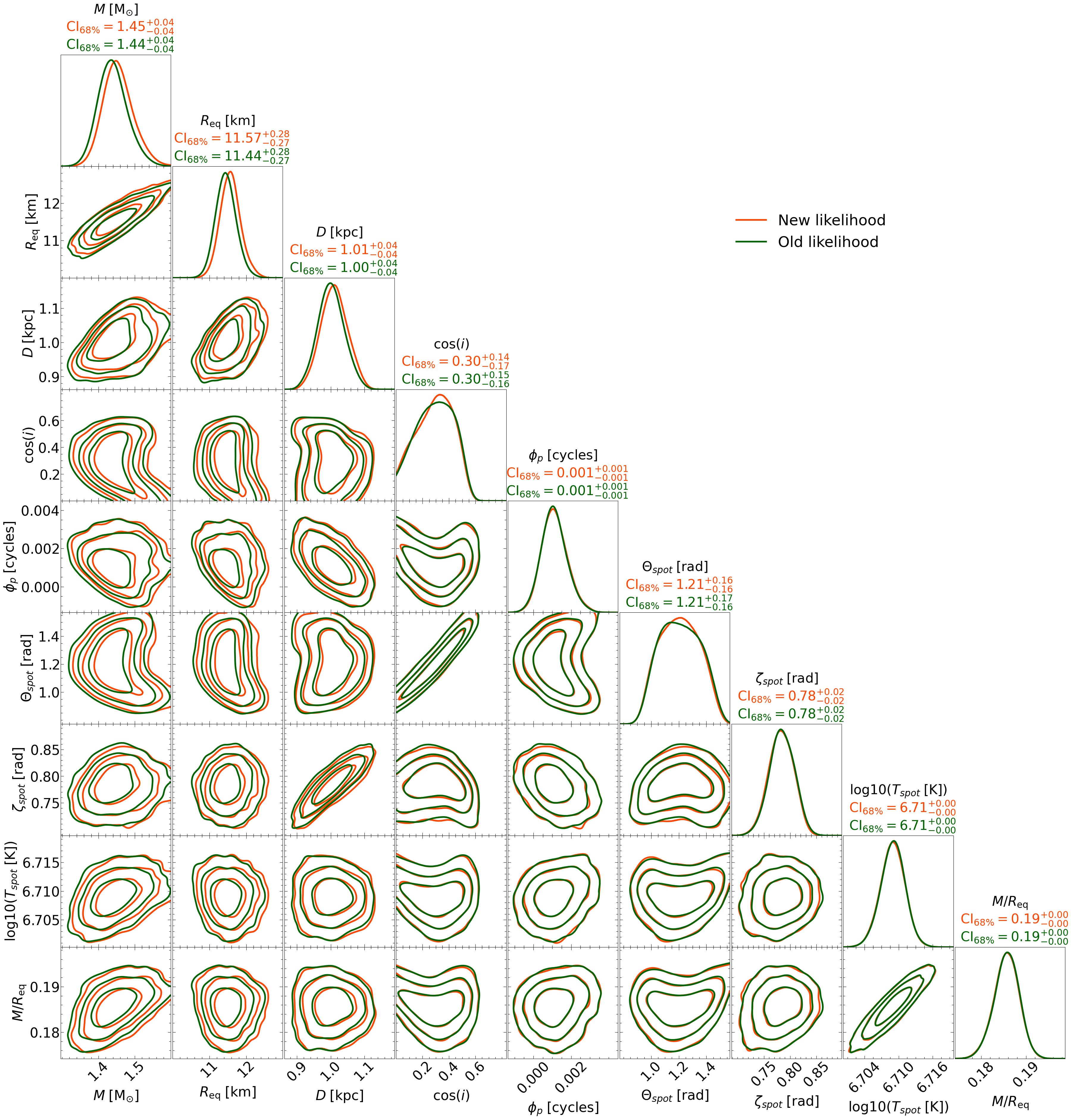

Now let’s plot what the new posteriors look like compared to the old ones.

[7]:

# Getdist settings

getdist_kde_settings = {'ignore_rows': 0,

'min_weight_ratio': 1.0e-10,

'contours': [0.683, 0.954, 0.997],

'credible_interval_threshold': 0.001,

'range_ND_contour': 0,

'range_confidence': 0.001,

'fine_bins': 1024,

'smooth_scale_1D': 0.4,

'num_bins': 100,

'boundary_correction_order': 1,

'mult_bias_correction_order': 1,

'smooth_scale_2D': 0.4,

'max_corr_2D': 0.99,

'fine_bins_2D': 512,

'num_bins_2D': 40}

[8]:

# Settings names, bounds and labels

old_model.names=['mass','radius','distance','cos_inclination','p__phase_shift',

'p__super_colatitude','p__super_radius','p__super_temperature']

# We will use the same bounds used during sampling

old_model.bounds = {'mass':(1.0,1.6),

'radius':(10,13),

'distance':(0.5,2.0),

'cos_inclination':(0,1),

'p__phase_shift':(-0.25, 0.75),

'p__super_colatitude':(0.001, math.pi/2 - 0.001),

'p__super_radius':(0.001, math.pi/2.0 - 0.001),

'p__super_temperature':(6., 7.)}

# Now the labels

old_model.labels = {'mass': r"M\;\mathrm{[M}_{\odot}\mathrm{]}",

'radius': r"R_{\mathrm{eq}}\;\mathrm{[km]}",

'distance': r"D \;\mathrm{[kpc]}",

'cos_inclination': r"\cos(i)",

'p__phase_shift': r"\phi_{p}\;\mathrm{[cycles]}",

'p__super_colatitude': r"\Theta_{spot}\;\mathrm{[rad]}",

'p__super_radius': r"\zeta_{spot}\;\mathrm{[rad]}",

'p__super_temperature': r"\mathrm{log10}(T_{spot}\;[\mathrm{K}])"}

old_model.names +=['compactness']

old_model.bounds['compactness']=(gravradius(1.0)/16.0, 1.0/3.0)

old_model.labels['compactness']= r"M/R_{\mathrm{eq}}"

[9]:

new_model.names = old_model.names

new_model.bounds = old_model.bounds

new_model.labels = old_model.labels

[10]:

precisions = {'mass': 2,

'radius': 2,

'distance': 2,

'cos_inclination': 2,

'p__phase_shift': 3,

'p__super_colatitude': 2,

'p__super_radius': 2,

'p__super_temperature': 2,

'compactness': 2}

[11]:

# Loading old run

old_model.runs = xpsi.Runs.load_runs(ID='Old likelihood',

run_IDs=['run'],

roots=['ST_live_1000_eff_0.3_seed42'],

base_dirs=['../../examples/examples_fast/Outputs/'],

use_nestcheck=[False],

kde_settings=getdist_kde_settings,

likelihood=old_model.likelihood,

names=old_model.names,

bounds=old_model.bounds,

labels=old_model.labels,

implementation='multinest',

precisions=precisions,

overwrite_transformed=True)

# Loading new run

new_model.runs = xpsi.Runs.load_runs(ID='New likelihood',

run_IDs=['run'],

roots=['ST_live_1000_eff_0.3_seed42_importance_sampled_likelihood_change'],

base_dirs=['../../examples/examples_fast/Outputs/'],

use_nestcheck=[False],

kde_settings=getdist_kde_settings,

likelihood=new_model.likelihood,

names=new_model.names,

bounds=new_model.bounds,

labels=new_model.labels,

implementation='multinest',

precisions=precisions,

overwrite_transformed=True)

Change output files to .npy to speed up post-processing

Change output files to .npy to speed up post-processing

[12]:

pp = xpsi.PostProcessing.CornerPlotter([old_model.runs, new_model.runs])

_ = pp.plot(

params=old_model.names,#["mass","radius"],

IDs=OrderedDict([('Old likelihood', ['run',]),('New likelihood', ['run',])]),

prior_density=False,

KL_divergence=True,

ndraws=5e4,

combine=False, combine_all=True, only_combined=False, overwrite_combined=True,

param_plot_lims={},

bootstrap_estimators=False,

bootstrap_density=False,

n_simulate=200,

crosshairs=False,

write=False,

ext='.png',

maxdots=3000,

root_filename='run',

credible_interval_1d=True,

annotate_credible_interval=True,

credible_interval_1d_all_show=True,

show_vband=None,

compute_all_intervals=False,

sixtyeight=True,

axis_tick_x_rotation=45.0,

num_plot_contours=3,

subplot_size=4.0,

legend_corner_coords=(0.675,0.8),

legend_frameon=False,

scale_attrs=OrderedDict([('legend_fontsize', 3.0),

('axes_labelsize', 2.35),

('axes_fontsize', 'axes_labelsize'),

]

),

colormap='Reds',

shaded=False,

shade_root_index=-1,

rasterized_shade=True,

no_ylabel=True,

no_ytick=True,

lw=4.0,

lw_1d=4.0,

filled=False,

normalize=True,

veneer=True,

line_colors=['darkgreen', "orangered"],

tqdm_kwargs={'disable': False},

lengthen=2.0,

embolden=1.0,

ci_gap=0.16,

nx=500)

Executing posterior density estimation...

Curating set of runs for posterior plotting...

Run set curated.

Constructing lower-triangle posterior density plot via Gaussian KDE:

plotting: ['mass', 'radius']

plotting: ['mass', 'distance']

plotting: ['mass', 'cos_inclination']

plotting: ['mass', 'p__phase_shift']

plotting: ['mass', 'p__super_colatitude']

plotting: ['mass', 'p__super_radius']

plotting: ['mass', 'p__super_temperature']

plotting: ['mass', 'compactness']

plotting: ['radius', 'distance']

plotting: ['radius', 'cos_inclination']

plotting: ['radius', 'p__phase_shift']

plotting: ['radius', 'p__super_colatitude']

plotting: ['radius', 'p__super_radius']

plotting: ['radius', 'p__super_temperature']

plotting: ['radius', 'compactness']

plotting: ['distance', 'cos_inclination']

plotting: ['distance', 'p__phase_shift']

plotting: ['distance', 'p__super_colatitude']

plotting: ['distance', 'p__super_radius']

plotting: ['distance', 'p__super_temperature']

plotting: ['distance', 'compactness']

plotting: ['cos_inclination', 'p__phase_shift']

plotting: ['cos_inclination', 'p__super_colatitude']

plotting: ['cos_inclination', 'p__super_radius']

plotting: ['cos_inclination', 'p__super_temperature']

plotting: ['cos_inclination', 'compactness']

plotting: ['p__phase_shift', 'p__super_colatitude']

plotting: ['p__phase_shift', 'p__super_radius']

plotting: ['p__phase_shift', 'p__super_temperature']

plotting: ['p__phase_shift', 'compactness']

plotting: ['p__super_colatitude', 'p__super_radius']

plotting: ['p__super_colatitude', 'p__super_temperature']

plotting: ['p__super_colatitude', 'compactness']

plotting: ['p__super_radius', 'p__super_temperature']

plotting: ['p__super_radius', 'compactness']

plotting: ['p__super_temperature', 'compactness']

Veneering spines and axis ticks...

Veneered.

Adding 1D marginal credible intervals...

Plotting credible regions for posterior Old likelihood...

Added 1D marginal credible intervals.

Adding 1D marginal credible intervals...

Plotting credible regions for posterior New likelihood...

Added 1D marginal credible intervals.

Constructed lower-triangle posterior density plot.

Posterior density estimation complete.

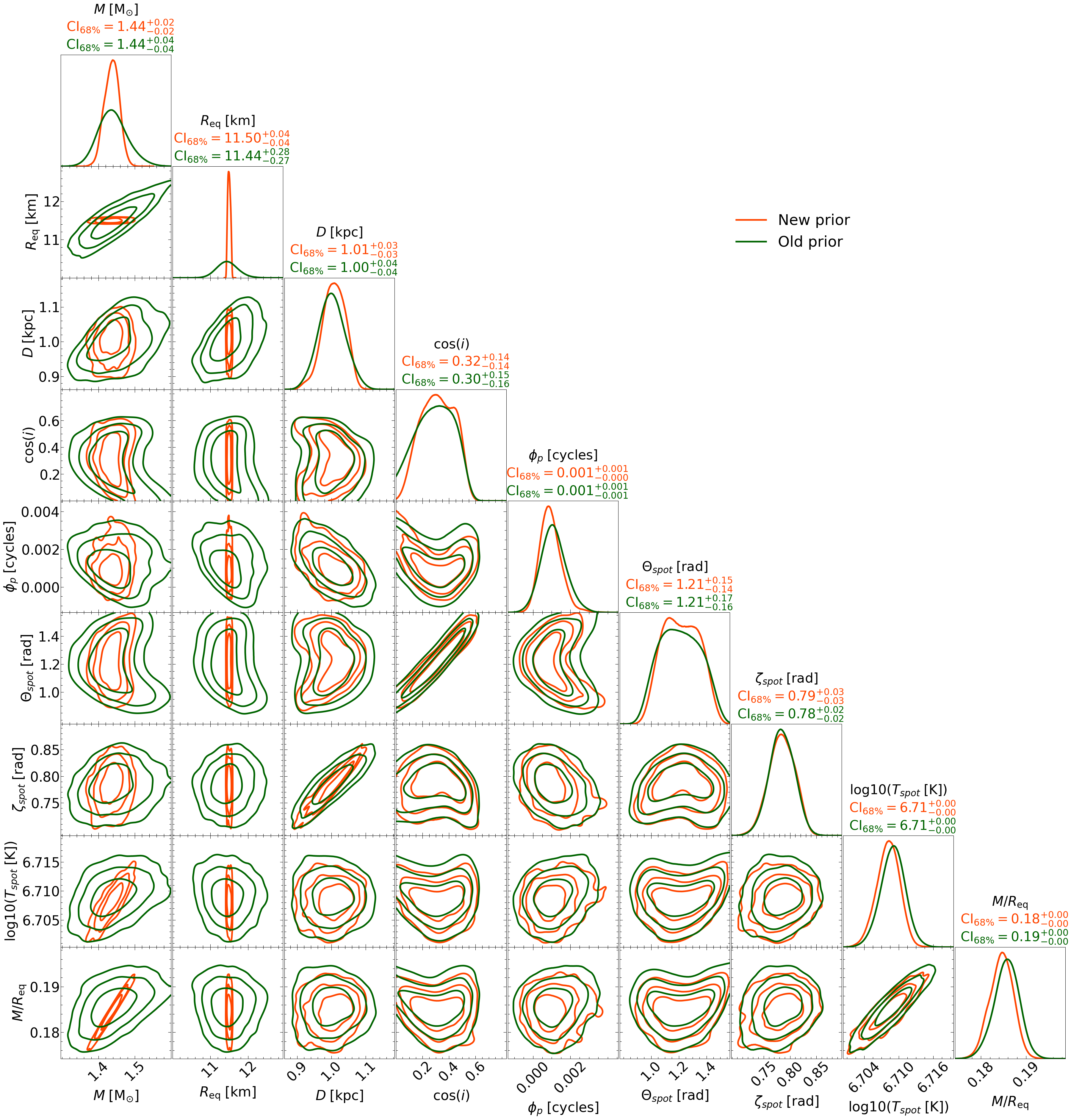

2. Prior Update

In the Module folder, we made a file called main_IS_prior.py. The model is the same, but the only change was the prior. In the CustomPrior_IS.py file, the mass prior changed (look at the density method) from :math:`M sim U(1., 1.6)` to :math:`M sim N(1.4, 0.01^2)`. The new mass distribution is truncated at :math:`2.5sigma`. The radius also changed from :math:`R_{mathrm{eq}} sim U(10., 13.)` to :math:`R_{mathrm{eq}} sim N(11.5, 0.01^2)`. The new radius distribution is truncated at :math:`1.5sigma`.

[12]:

%%capture

from Modules import main_IS_prior as new_model # The prior density has changed in CustomPrior_IS compared to CustomPrior

[13]:

# Doing importance sampling

importance(new_model.likelihood,

old_model.likelihood,

'../../examples/examples_fast/Outputs/ST_live_1000_eff_0.3_seed42',

names = names,

likelihood_change = False,

prior_change = True,

weight_threshold=1.0e-30,

overwrite=True)

Importance sampling commencing...

Cross-checking parameter names...

Loading samples...

Importance sample fraction = 84.817%.

Distributing workload amongst 1 MPI processes...

Renormalising weights...

normalisation constant = 13.30705930868669

Writing to disk...

Restoring likelihood-object parameter update protocol attributes...

Importance sampling completed.

For evidence computation: normalisation constant = 13.30705930868669

Running the cell above will generate a file called, ST_live_1000_eff_0.3_seed42_importance_sampled_prior_change.txt that contains the new posteriors.

Now let’s plot the new posteriors and compare them to the old ones.

[14]:

new_model.names = old_model.names

new_model.bounds = old_model.bounds

new_model.labels = old_model.labels

[15]:

old_model.runs = xpsi.Runs.load_runs(ID='Old prior',

run_IDs=['run'],

roots=['ST_live_1000_eff_0.3_seed42'],

base_dirs=['../../examples/examples_fast/Outputs/'],

use_nestcheck=[False],

kde_settings=getdist_kde_settings,

likelihood=old_model.likelihood,

names=old_model.names,

bounds=old_model.bounds,

labels=old_model.labels,

implementation='multinest',

precisions=precisions,

overwrite_transformed=True)

new_model.runs = xpsi.Runs.load_runs(ID='New prior',

run_IDs=['run'],

roots=['ST_live_1000_eff_0.3_seed42_importance_sampled_prior_change'],

base_dirs=['../../examples/examples_fast/Outputs/'],

use_nestcheck=[False],

kde_settings=getdist_kde_settings,

likelihood=new_model.likelihood,

names=new_model.names,

bounds=new_model.bounds,

labels=new_model.labels,

implementation='multinest',

precisions=precisions,

overwrite_transformed=True)

Change output files to .npy to speed up post-processing

Change output files to .npy to speed up post-processing

WARNING:root:outlier fraction 0.0027829313543599257

[17]:

pp = xpsi.PostProcessing.CornerPlotter([old_model.runs, new_model.runs])

_ = pp.plot(

params=old_model.names,#["mass","radius"],

IDs=OrderedDict([('Old prior', ['run',]),('New prior', ['run',])]),

prior_density=False,

KL_divergence=True,

ndraws=5e4,

combine=False, combine_all=True, only_combined=False, overwrite_combined=True,

param_plot_lims={},

bootstrap_estimators=False,

bootstrap_density=False,

n_simulate=200,

crosshairs=False,

write=False,

ext='.png',

maxdots=3000,

root_filename='run',

credible_interval_1d=True,

annotate_credible_interval=True,

credible_interval_1d_all_show=True,

show_vband=None,

compute_all_intervals=False,

sixtyeight=True,

axis_tick_x_rotation=45.0,

num_plot_contours=3,

subplot_size=4.0,

legend_corner_coords=(0.675,0.8),

legend_frameon=False,

scale_attrs=OrderedDict([('legend_fontsize', 3.0),

('axes_labelsize', 2.35),

('axes_fontsize', 'axes_labelsize'),

]

),

colormap='Reds',

shaded=False,

shade_root_index=-1,

rasterized_shade=True,

no_ylabel=True,

no_ytick=True,

lw=4.0,

lw_1d=4.0,

filled=False,

normalize=True,

veneer=True,

line_colors=['darkgreen', "orangered"],

tqdm_kwargs={'disable': False},

lengthen=2.0,

embolden=1.0,

ci_gap=0.16,

nx=500)

Executing posterior density estimation...

Curating set of runs for posterior plotting...

Run set curated.

Constructing lower-triangle posterior density plot via Gaussian KDE:

plotting: ['mass', 'radius']

plotting: ['mass', 'distance']

plotting: ['mass', 'cos_inclination']

plotting: ['mass', 'p__phase_shift']

plotting: ['mass', 'p__super_colatitude']

plotting: ['mass', 'p__super_radius']

plotting: ['mass', 'p__super_temperature']

plotting: ['mass', 'compactness']

plotting: ['radius', 'distance']

plotting: ['radius', 'cos_inclination']

plotting: ['radius', 'p__phase_shift']

plotting: ['radius', 'p__super_colatitude']

plotting: ['radius', 'p__super_radius']

plotting: ['radius', 'p__super_temperature']

plotting: ['radius', 'compactness']

plotting: ['distance', 'cos_inclination']

plotting: ['distance', 'p__phase_shift']

plotting: ['distance', 'p__super_colatitude']

plotting: ['distance', 'p__super_radius']

plotting: ['distance', 'p__super_temperature']

plotting: ['distance', 'compactness']

plotting: ['cos_inclination', 'p__phase_shift']

plotting: ['cos_inclination', 'p__super_colatitude']

plotting: ['cos_inclination', 'p__super_radius']

plotting: ['cos_inclination', 'p__super_temperature']

plotting: ['cos_inclination', 'compactness']

plotting: ['p__phase_shift', 'p__super_colatitude']

plotting: ['p__phase_shift', 'p__super_radius']

plotting: ['p__phase_shift', 'p__super_temperature']

plotting: ['p__phase_shift', 'compactness']

plotting: ['p__super_colatitude', 'p__super_radius']

plotting: ['p__super_colatitude', 'p__super_temperature']

plotting: ['p__super_colatitude', 'compactness']

plotting: ['p__super_radius', 'p__super_temperature']

plotting: ['p__super_radius', 'compactness']

plotting: ['p__super_temperature', 'compactness']

Veneering spines and axis ticks...

Veneered.

Adding 1D marginal credible intervals...

Plotting credible regions for posterior Old prior...

Added 1D marginal credible intervals.

Adding 1D marginal credible intervals...

Plotting credible regions for posterior New prior...

Added 1D marginal credible intervals.

Constructed lower-triangle posterior density plot.

Posterior density estimation complete.

This is it. That’s a very simple example.

Alternatively you can also use the tools found in xpsi/utilities/ImportanceSample.py to perform importance sampling.

It can be used e.g. in the following way:

[18]:

f = open("config_importance_sampling.ini", "w")

f.write("--name-original-main=main\n")

f.write("--name-importance-sample-main=main_IS_prior\n")

f.write("--path-to-mains=../../examples/examples_fast/Modules/\n")

f.write("--path-to-outputs=../../examples/examples_fast/Outputs/\n")

f.write("--output-root-name=ST_live_1000_eff_0.3_seed42\n")

f.write("--names=mass radius distance cos_inclination p__phase_shift p__super_colatitude p__super_radius p__super_temperature\n")

f.write("--likelihood-change=False\n")

f.write("--prior-change=True\n")

f.write("--weight-threshold=1e-30\n")

f.write("--overwrite=True\n")

f.close()

[19]:

!python ../../xpsi/utilities/ImportanceSample.py @config_importance_sampling.ini

WARNING: Please be aware that ERRORS can arise if relative paths are used in the main.py file (to point to the config file) or in the config.ini file (to point to other files used in the analysis).

/=============================================\

| X-PSI: X-ray Pulse Simulation and Inference |

|---------------------------------------------|

| Version: 3.0.0 |

|---------------------------------------------|

| https://xpsi-group.github.io/xpsi |

\=============================================/

Imported emcee version: 3.1.6

Imported PyMultiNest.

Imported UltraNest.

Warning: Cannot import torch and test SBI_wrapper.

Imported GetDist version: 1.5.3

Imported nestcheck version: 0.2.1

Setting channels for event data...

Channels set.

Setting channels for loaded instrument response (sub)matrix...

Channels set.

No parameters supplied... empty subspace created.

Creating parameter:

> Named "phase_shift" with fixed value 0.000e+00.

> The phase shift for the signal, a periodic parameter [cycles].

Creating parameter:

> Named "frequency" with fixed value 3.140e+02.

> Spin frequency [Hz].

Creating parameter:

> Named "mass" with bounds [1.000e+00, 1.600e+00].

> Gravitational mass [solar masses].

Creating parameter:

> Named "radius" with bounds [1.000e+01, 1.300e+01].

> Coordinate equatorial radius [km].

Creating parameter:

> Named "distance" with bounds [5.000e-01, 2.000e+00].

> Earth distance [kpc].

Creating parameter:

> Named "cos_inclination" with bounds [0.000e+00, 1.000e+00].

> Cosine of Earth inclination to rotation axis.

Creating parameter:

> Named "super_colatitude" with bounds [1.000e-03, 1.570e+00].

> The colatitude of the centre of the superseding region [radians].

Creating parameter:

> Named "super_radius" with bounds [1.000e-03, 1.570e+00].

> The angular radius of the (circular) superseding region [radians].

Creating parameter:

> Named "phase_shift" with bounds [-2.500e-01, 7.500e-01].

> The phase of the hot region, a periodic parameter [cycles].

Creating parameter:

> Named "super_temperature" with bounds [6.000e+00, 7.000e+00].

> log10(superseding region effective temperature [K]).

Creating parameter:

> Named "mode_frequency" with fixed value 3.140e+02.

> Coordinate frequency of the mode of radiative asymmetry in the

photosphere that is assumed to generate the pulsed signal [Hz].

No parameters supplied... empty subspace created.

Checking likelihood and prior evaluation before commencing sampling...

Not using ``allclose`` function from NumPy.

Using fallback implementation instead.

Checking closeness of likelihood arrays:

-3.1603740790e+04 | -3.1603740790e+04 .....

Closeness evaluated.

Log-likelihood value checks passed on root process.

Checks passed.

Setting channels for event data...

Channels set.

Setting channels for loaded instrument response (sub)matrix...

Channels set.

No parameters supplied... empty subspace created.

Creating parameter:

> Named "phase_shift" with fixed value 0.000e+00.

> The phase shift for the signal, a periodic parameter [cycles].

Creating parameter:

> Named "frequency" with fixed value 3.140e+02.

> Spin frequency [Hz].

Creating parameter:

> Named "mass" with bounds [1.000e+00, 1.600e+00].

> Gravitational mass [solar masses].

Creating parameter:

> Named "radius" with bounds [1.000e+01, 1.300e+01].

> Coordinate equatorial radius [km].

Creating parameter:

> Named "distance" with bounds [5.000e-01, 2.000e+00].

> Earth distance [kpc].

Creating parameter:

> Named "cos_inclination" with bounds [0.000e+00, 1.000e+00].

> Cosine of Earth inclination to rotation axis.

Creating parameter:

> Named "super_colatitude" with bounds [1.000e-03, 1.570e+00].

> The colatitude of the centre of the superseding region [radians].

Creating parameter:

> Named "super_radius" with bounds [1.000e-03, 1.570e+00].

> The angular radius of the (circular) superseding region [radians].

Creating parameter:

> Named "phase_shift" with bounds [-2.500e-01, 7.500e-01].

> The phase of the hot region, a periodic parameter [cycles].

Creating parameter:

> Named "super_temperature" with bounds [6.000e+00, 7.000e+00].

> log10(superseding region effective temperature [K]).

Creating parameter:

> Named "mode_frequency" with fixed value 3.140e+02.

> Coordinate frequency of the mode of radiative asymmetry in the

photosphere that is assumed to generate the pulsed signal [Hz].

No parameters supplied... empty subspace created.

Checking likelihood and prior evaluation before commencing sampling...

Not using ``allclose`` function from NumPy.

Using fallback implementation instead.

Checking closeness of likelihood arrays:

-3.1603740790e+04 | -3.1603740790e+04 .....

Closeness evaluated.

Log-likelihood value checks passed on root process.

Checks passed.

Importance sampling commencing...

Cross-checking parameter names...

Loading samples...

Importance sample fraction = 84.817%.

Distributing workload amongst 1 MPI processes...

Renormalising weights...

Writing to disk...

Restoring likelihood-object parameter update protocol attributes...

Importance sampling completed.

This will create exactly the same importance sampled output file as the prior-change example above.

Recompute the evidence after importance sampling

Evidence is then scaled as: \(Z_1 = Z_0 \times \sum_i w_i\) where \(Z_0\) is the `old’ evidence (before importance sampling), \(Z_1\) will be your new one, with \(\sum w_i\) being the normalization constant

Equivalently, in log-scale: \(\ln Z_1 = \ln Z_0 + \ln (\sum w_i)\)