Piecewise Polytropic Prior Example

The main purpose of this code is to demonstrate how one can fix the log-likelihood to a constant value and use MultiNest to sample the prior space log-uniformly. Within this script, the PosteriorAnalysis module (PosteriorAnalysis.py) is then used to generate the prior auxiliary data and the prior 95% confidence intervals.

The following block of code imports NEoST and its prerequisites. It also defines a name for the inference run; this name is prefixed to all of NEoST's output files.

The machinery used in this script, and its explanation, is identical to that found in the Piecewise Polytropic, Speed of Sound, and Tabulated Examples.

[1]:

import neost
from neost.eos import polytropes
from neost.Prior import Prior
from neost.Star import Star
from neost.Likelihood import Likelihood
from neost import PosteriorAnalysis
from scipy.stats import multivariate_normal
from scipy.stats import gaussian_kde
import numpy as np
import matplotlib
from matplotlib import pyplot
from pymultinest.solve import solve
import time
import os

# if not os.path.exists("chains"):
#     os.mkdir("chains")

import neost.global_imports as global_imports

# Some physical constants
c = global_imports._c
G = global_imports._G
Msun = global_imports._M_s
pi = global_imports._pi
rho_ns = global_imports._rhons

# Define name for run
run_name = "prior-hebeler-pp-"
directory = '../../examples/chains'

using c code
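The run name matters because the `solve` call passes `outputfiles_basename=f'{directory}/{run_name}'` to MultiNest, and MultiNest appends its own suffixes to that prefix. The `analysing data from ../../examples/chains/prior-hebeler-pp-.txt` line printed after sampling refers to one of those files. The sketch below rebuilds the prefix and can optionally create the output directory with `os.makedirs`, much as the commented-out `os.mkdir` lines above would. `prepare_output_prefix`, `expected_files`, and the suffix list are hypothetical illustrations. This notebook's log confirms `.txt`; `post_equal_weights.dat` and `stats.dat` are standard MultiNest outputs.

```python
import os
import tempfile

def prepare_output_prefix(directory, run_name, create=True):
    """Hypothetical helper: return the MultiNest output prefix used in this
    notebook (f'{directory}/{run_name}') and optionally create the directory."""
    if create:
        os.makedirs(directory, exist_ok=True)  # no error if it already exists
    # MultiNest appends its suffixes directly to this prefix, with no separator
    return f"{directory}/{run_name}"

# A few standard MultiNest output suffixes (illustrative, not exhaustive)
SUFFIXES = [".txt", "post_equal_weights.dat", "stats.dat"]

def expected_files(prefix):
    return [prefix + suffix for suffix in SUFFIXES]

# The notebook's values, without touching the file system
prefix = prepare_output_prefix("../../examples/chains", "prior-hebeler-pp-", create=False)
print(expected_files(prefix)[0])  # ../../examples/chains/prior-hebeler-pp-.txt

# Creating the directory, done inside a throwaway temporary folder
with tempfile.TemporaryDirectory() as tmp:
    chains_dir = os.path.join(tmp, "chains")
    tmp_prefix = prepare_output_prefix(chains_dir, "prior-hebeler-pp-")
    print(os.path.isdir(chains_dir))  # True
```

With `exist_ok=True`, rerunning the notebook with `resume=False` does not fail just because the chains directory already exists.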

Below, we define the piecewise polytropic equation of state model, build the J0740 likelihood function from its mass-radius samples, and set the variable parameters with their respective prior ranges.
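Here is a brief reminder of what the piecewise polytropic model does; the Piecewise Polytropic Example covers it in full. Each density segment obeys `P = K_i * rho**Gamma_i`. Every `K_i` after the first is fixed by requiring the pressure to be continuous at the transition densities, which gives `K_(i+1) = K_i * rho_i**(Gamma_i - Gamma_(i+1))`. The NumPy sketch below illustrates only that continuity rule, in arbitrary units. It is not NEoST's `PolytropicEoS`. The function name, `K0`, and the example `gammas` and `breaks` values are assumptions, and the sketch does not reproduce how NEoST matches the first segment onto the cEFT crust at `rho_t`.

```python
import numpy as np

def piecewise_polytrope_pressure(rho, K0, gammas, rho_breaks):
    """Pressure for a piecewise polytrope P = K_i * rho**Gamma_i.

    rho_breaks holds the increasing densities where the adiabatic index
    switches from gammas[i] to gammas[i+1]; len(gammas) == len(rho_breaks) + 1.
    Units are arbitrary in this sketch.
    """
    rho = np.asarray(rho, dtype=float)
    # Continuity at each break: K_{i+1} * rb**G_{i+1} == K_i * rb**G_i
    Ks = [K0]
    for rb, g_lo, g_hi in zip(rho_breaks, gammas[:-1], gammas[1:]):
        Ks.append(Ks[-1] * rb ** (g_lo - g_hi))
    # Segment index of each density: 0 below the first break, 1 between breaks, ...
    seg = np.searchsorted(rho_breaks, rho, side="right")
    K = np.asarray(Ks)[seg]
    G = np.asarray(gammas, dtype=float)[seg]
    return K * rho ** G

# Three segments, mirroring gamma1..gamma3 with two transition densities
gammas = [3.0, 2.5, 2.0]
breaks = [2.0, 4.0]
rho = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
print(piecewise_polytrope_pressure(rho, 1.0, gammas, breaks))
```

Because the `K_i` are derived rather than sampled, only the adiabatic indices and the transition densities need prior ranges. That is why the variable parameters below are `gamma1` to `gamma3`, `rho_t1`, and `rho_t2`, plus the cEFT normalization.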

[2]:

# We're exploring a piecewise polytropic (PP) EoS parametrization with a chiral effective field theory (CEFT) parametrization based on Hebeler's work
# Transition between the PP parametrization and the CEFT parametrization occurs at 1.1*saturation density
polytropes_pp = polytropes.PolytropicEoS(crust = 'ceft-Hebeler', rho_t = 1.1*rho_ns)

# Create the likelihoods for the individual measurements
mass_radius_j0740 = np.load('../../examples/j0740.npy').T
J0740_LL = gaussian_kde(mass_radius_j0740)

# Pass the likelihoods to the solver
likelihood_functions = [J0740_LL.pdf]
likelihood_params = [['Mass', 'Radius']]

# Define whether event is GW or not and define number of stars/events
chirp_mass = [None]
number_stars = len(chirp_mass)

# Define variable parameters, same prior as in previous papers by Raaijmakers et al.
variable_params = {'ceft': [polytropes_pp.min_norm, polytropes_pp.max_norm],
                   'gamma1': [1., 4.5],
                   'gamma2': [0., 8.],
                   'gamma3': [0.5, 8.],
                   'rho_t1': [1.5, 8.3],
                   'rho_t2': [1.5, 8.3]}
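`gaussian_kde` expects its data with one row per dimension, which is why the loaded array is transposed with `.T` before `J0740_LL` is built. `J0740_LL.pdf` is then evaluated at (mass, radius) points, following the `['Mass', 'Radius']` order in `likelihood_params`. The sketch below reproduces that pattern on synthetic Gaussian samples, because the contents of `j0740.npy` are not shown here. The sample means and widths, and the assumption that the file stores one (mass, radius) pair per row, are illustrative only.

```python
import numpy as np
from scipy.stats import gaussian_kde

rng = np.random.default_rng(0)

# Synthetic stand-in for j0740.npy: n rows of (mass [Msun], radius [km]).
# These numbers are made up for illustration.
n = 5000
samples = np.column_stack([
    rng.normal(2.0, 0.1, n),   # mass
    rng.normal(12.5, 1.0, n),  # radius
])

# gaussian_kde wants shape (n_dims, n_samples), hence the transpose
kde = gaussian_kde(samples.T)

# pdf also takes points with shape (n_dims, n_points): row 0 masses, row 1 radii
points = np.array([[2.0, 2.0, 1.4],
                   [12.5, 16.0, 12.5]])
density = kde.pdf(points)
print(density)
```

The first point sits at the centre of the synthetic samples, so it receives the highest density of the three.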

Similar to the posterior analysis tutorials, we define the static parameters (of which there are none in this example), add the variable parameters for each source (only one in this case), and define the prior object. Additionally, we define a loglike function that returns the same constant log-likelihood value for every sample, whatever the parameter values it receives (pseudo_var). Because every sample has an equal likelihood, MultiNest favors no region of parameter space over another, and the samples it returns are distributed according to the prior itself. This gives us a sufficiently uniform and non-informative prior for each parameter in the equation of state.
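This reasoning can be checked on a grid. The posterior is proportional to prior × likelihood, so a likelihood that is the same everywhere cancels on normalization and leaves the prior unchanged. The sketch below shows this using a made-up, deliberately non-uniform prior on a single parameter. It illustrates Bayes' theorem only and does not call NEoST or MultiNest; `posterior_on_grid` is a hypothetical helper.

```python
import numpy as np

def posterior_on_grid(log_prior, log_like):
    """Normalized posterior weights on a grid, computed in log space."""
    log_post = log_prior + log_like
    log_post = log_post - log_post.max()  # guard against overflow in exp
    w = np.exp(log_post)
    return w / w.sum()

x = np.linspace(0.0, 1.0, 101)
log_prior = np.log(1.0 + 2.0 * x)          # any prior shape works here
prior = np.exp(log_prior) / np.exp(log_prior).sum()

# Constant log-likelihood, like loglike(pseudo_var) returning 1.
flat = posterior_on_grid(log_prior, np.full_like(x, 1.0))
# A peaked likelihood for comparison
peaked = posterior_on_grid(log_prior, -0.5 * ((x - 0.3) / 0.05) ** 2)

print(np.allclose(flat, prior))    # True: the posterior equals the prior
print(np.allclose(peaked, prior))  # False: the likelihood reshapes it
```

The particular constant (1 in `loglike`) does not matter, because it only shifts every log-weight by the same amount.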

[3]:

# Define static parameters, empty dict because all params are variable
static_params = {}

# Add a central-density parameter for each star
for i in range(number_stars):
    variable_params.update({'rhoc_' + str(i+1): [14.6, 16]})

# Define prior
prior = Prior(polytropes_pp, variable_params, static_params, chirp_mass)

print("Bounds of prior are")
print(variable_params)
print("number of parameters is %d" % len(variable_params))

# Constant log-likelihood: every sample is equally likely, so MultiNest samples the prior
def loglike(pseudo_var):
    return 1.

Bounds of prior are

{'ceft': [1.676, 2.814], 'gamma1': [1.0, 4.5], 'gamma2': [0.0, 8.0], 'gamma3': [0.5, 8.0], 'rho_t1': [1.5, 8.3], 'rho_t2': [1.5, 8.3], 'rhoc_1': [14.6, 16]}

number of parameters is 7
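MultiNest draws points uniformly in the unit hypercube and relies on the `Prior` callback (here `prior.inverse_sample`) to map them onto physical parameters. The sketch below shows that mapping for independent uniform priors, using the bounds printed above. It is an illustration under assumptions, not NEoST's `Prior.inverse_sample`, which may add constraints such as an ordering of the transition densities. `uniform_inverse_sample` is a hypothetical name. The [14.6, 16] range suggests that `rhoc_1` is the base-10 logarithm of the central density. If so, a uniform draw in `rhoc_1` is a log-uniform draw in density, but that reading is also an assumption.

```python
import numpy as np

# Bounds as printed by the notebook (dict order fixes the parameter order)
bounds = {'ceft': [1.676, 2.814], 'gamma1': [1.0, 4.5], 'gamma2': [0.0, 8.0],
          'gamma3': [0.5, 8.0], 'rho_t1': [1.5, 8.3], 'rho_t2': [1.5, 8.3],
          'rhoc_1': [14.6, 16]}

def uniform_inverse_sample(cube, bounds):
    """Map point(s) in [0, 1]^n onto independent uniform priors."""
    lo = np.array([b[0] for b in bounds.values()], dtype=float)
    hi = np.array([b[1] for b in bounds.values()], dtype=float)
    return lo + np.asarray(cube, dtype=float) * (hi - lo)

rng = np.random.default_rng(1)
cube = rng.random((10000, len(bounds)))       # what MultiNest would supply
theta = uniform_inverse_sample(cube, bounds)  # shape (10000, 7)

# If rhoc_1 is log10(central density), the density itself is log-uniform
central_density = 10.0 ** theta[:, -1]
print(theta.min(axis=0), theta.max(axis=0))
```

The function accepts a single 7-vector, as a MultiNest callback would receive, or a batch of rows.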

Here, we use MultiNest to sample the prior space, and each sample it draws from the prior is assigned the constant log-likelihood value of 1. Note the greatly increased number of live points (n_live_points=10000). This is required because the sampling terminates after a single iteration, so the number of live points effectively sets how many prior samples are produced.

[5]:

start = time.time()
result = solve(LogLikelihood=loglike, Prior=prior.inverse_sample, n_live_points=10000, evidence_tolerance=0.1,
               n_dims=len(variable_params), sampling_efficiency=0.8, outputfiles_basename=f'{directory}/{run_name}',
               verbose=True, resume=False)
end = time.time()
print(end - start)

analysing data from ../../examples/chains/prior-hebeler-pp-.txt

1308.548851966858
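Once the run has finished, the equally weighted samples can also be inspected directly. In MultiNest's `post_equal_weights.dat` file, each row holds the parameter values followed by the log-likelihood, so for this run the last column should be constant. The sketch below assumes the samples have already been loaded into an array, for example with `np.loadtxt`. It computes a central 95% interval for each sampled parameter with `np.percentile`. It uses synthetic uniform samples so that it runs on its own, and `summarize_prior_samples` is a hypothetical helper. It is a quick per-parameter check, not a substitute for the auxiliary data computed by `PosteriorAnalysis.compute_auxiliary_data`.

```python
import numpy as np

def summarize_prior_samples(table, names, level=95.0):
    """Split an equal-weights table into parameters and log-likelihood and
    return the central `level`% interval of each parameter, plus the spread
    (max - min) of the log-likelihood column.

    Assumes the MultiNest post_equal_weights.dat layout: one row per sample,
    parameter columns first, log-likelihood in the last column.
    """
    table = np.atleast_2d(np.asarray(table, dtype=float))
    params, loglike = table[:, :-1], table[:, -1]
    tail = (100.0 - level) / 2.0
    lo, hi = np.percentile(params, [tail, 100.0 - tail], axis=0)
    intervals = {name: (float(l), float(h)) for name, l, h in zip(names, lo, hi)}
    return intervals, float(loglike.max() - loglike.min())

# Synthetic stand-in for the real file, which could be read with e.g.
# table = np.loadtxt(f'{directory}/{run_name}post_equal_weights.dat')
rng = np.random.default_rng(2)
gamma1 = rng.uniform(1.0, 4.5, 10000)
table = np.column_stack([gamma1, np.ones_like(gamma1)])  # loglike fixed at 1

intervals, loglike_spread = summarize_prior_samples(table, ['gamma1'])
print(intervals['gamma1'])  # close to (1.0875, 4.4125), the exact percentiles of U(1, 4.5)
print(loglike_spread)       # 0.0: every sample has the same log-likelihood
```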

[6]:

# Compute auxiliary data for posterior analysis

PosteriorAnalysis.compute_auxiliary_data(directory, polytropes_pp, variable_params, static_params, chirp_mass, identifier=run_name)

# Make some analysis plots

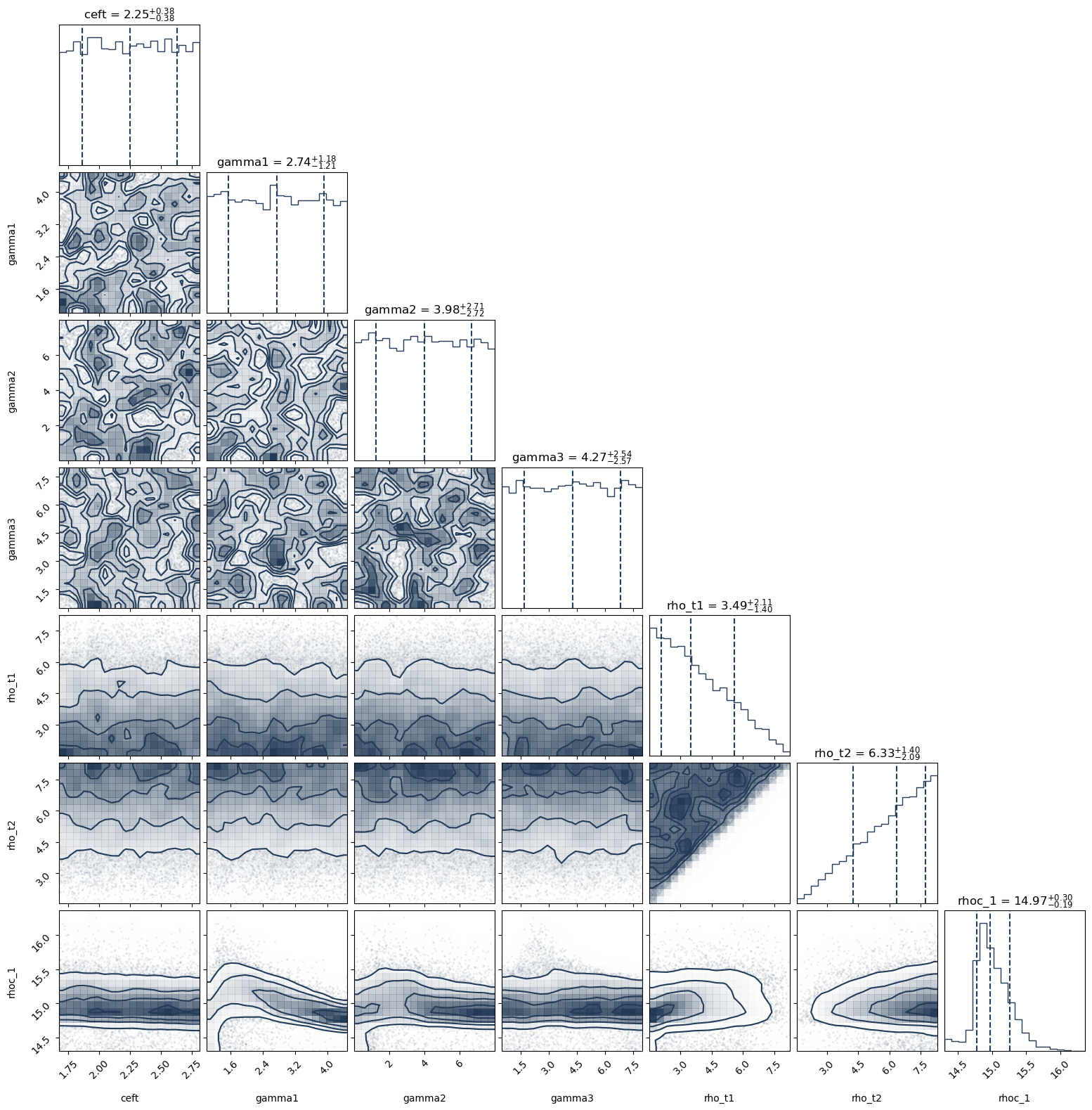

PosteriorAnalysis.cornerplot(directory, variable_params, identifier=run_name)

PosteriorAnalysis.mass_radius_posterior_plot(directory, identifier=run_name)

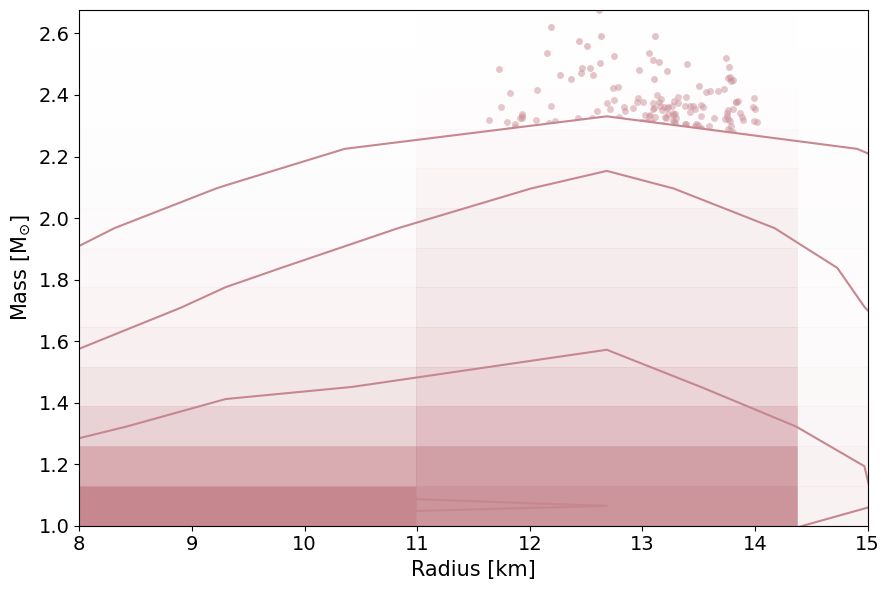

PosteriorAnalysis.mass_radius_prior_predictive_plot(directory, variable_params, identifier=run_name, label_name='+ J0740 dataset')

PosteriorAnalysis.eos_posterior_plot(directory, variable_params, identifier=run_name)

Total number of samples is 10000

|████████████████████████████████████████| 10000/10000 [100%] in 41:23.6 (4.03/s)

sample too small for 7758824319047053.00

sample too small for 8443657568728348.00

sample too small for 9188937680019554.00

sample too small for 10000000000000000.00

sample too small for 6019935481383572.00

sample too small for 6551285568595496.00

sample too small for 7129535313794352.00

sample too small for 7758824319047053.00

sample too small for 8443657568728348.00

sample too small for 9188937680019554.00

sample too small for 10000000000000000.00

sample too small for 2.46

sample too small for 2.51

sample too small for 2.57

sample too small for 2.62

sample too small for 2.68

sample too small for 2.73

sample too small for 2.79

sample too small for 2.84

sample too small for 2.90